[Originally posted on the BUGGED website]

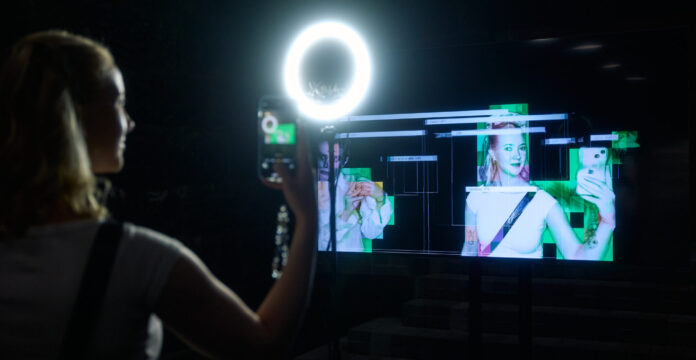

Spooky AI – privacy concerns and algorithms that go ‘bump in the night’ is a joint seminar between the BUGGED and SYNTHETICA projects in which we take privacy discussions beyond the creepy to engage with issues causing stress, anxiety and hypervigilance in everyday technological experience.

Organisers: BUGGED and SYNTHETICA

Place: TF4103 Technobothnia (just next to VME Interaction Design Environment), University of Vaasa, Puuvillakuja 3, Vaasa

Time: 14:00-16:30

14:00-14:10 Opening words

14:10-14:50 Keynote – “Super-Augmentation: Exploring the Intersections of AI, Hyper-Personalised Data, and Society”, Associate Professor of Communication Toija Cinque, Critical Digital Infrastructures and Interfaces (CDII) Research Group, Deakin University, Melbourne, Australia

14:50-15:05 Coffee

15:05-15:40 Short talks from the BUGGED and SYNTHETICA teams

15:40-16:10 Experiential mirrors – empathy mirror exercise on privacy experience

16:10-16:20 Whole group reflections

16:20-16:30 Busting the ghosts – which proton-zapper next?

********

KEYNOTE TITLE: “Super-Augmentation: Exploring the Intersections of AI, Hyper-Personalised Data, and Society”

Synopsis

From a Western perspective, the historical evolution of Large Language Models (LLMs) like ChatGPT and image generators such as DALL·E, Midjourney and OpenAI’s soon-to-be-released Sora unfolds along a continuum of thinking that oscillates between hype and scandal. In rapid time, ChatGPT-4, released by OpenAI, became available in approximately 188 countries following its initial launch in November 2022. Meanwhile, Microsoft’s Copilot, launched in November 2023, is accessible in the U.S., North and South America, the U.K. and throughout Asia, including South-East Asian regions. Google’s Bard, introduced in May 2023, has reached over 180 countries and territories. The updated ChatGPT-4o and emerging LLMs represent a significant advancement in the field of Artificial Intelligence (AI), harnessing the power of massive datasets to offer insights and advice across a broad spectrum of subjects. Built on the foundation of copious amounts of text, much of which is sourced from the internet, these models possess the remarkable ability to engage in human-like conversations on a vast array of topics. Their capacity to process, synthesise, and generate language-based responses has made them, or soon will make them, invaluable tools in both educational and professional contexts. However, it is important to approach their outputs with a critical eye. This work speculates on what this might mean for users at the individual level of everyday interactions with connected screen devices, and for the data collected and curated, by and for us, by ‘agents’ capable of more complex ‘reasoning’ than current personal apps like Siri or Alexa. By drawing on key infrastructures, users engage with big and small data in hitherto unprecedented detail. Data interpellates us. Yet data is obscure and enigmatic.