BUGGED INTERVIEW SERIES 01

Thoughts from Anne Scott Wilson

At VME we house a family of research projects spanning almost every human-computer interaction topic you can think of. We also see a fruitful relationship between our internal projects and the external projects we encounter or are actively involved with. This blog post reports activities of the Academy of Finland-funded BUGGED project that were enriched by the mobility experience enabled by the European Commission's funding of the OpenInnoTrain project.

During my OpenInnoTrain secondment at RMIT, Melbourne, I had the privilege of meeting numerous scholars and practitioners across disciplines, institutions and the city. In an effort to expand our research in the BUGGED project (Emotional Experience of Privacy and Ethics in Persuasive Everyday Systems, Academy of Finland), I asked my new acquaintances to share their thoughts on privacy and the GDPR from their perspective as researchers. Here, I report the thoughts and feelings of fellow artist and academic Anne Scott Wilson from Deakin University, Melbourne, Australia.

Below are Anne's insights.

Rebekah: What does the GDPR mean to you as a scholar?

Anne: The relationship between automated online systems and myself as a scholar is at times very efficient, facilitating complex cross-platform/informational sections in a moment. Yet continuous surveillance, by way of data collection through email categories, the calendar and other AI measuring tools, invades one's sense of safety, cracking open the nuances that fill the everyday routines of online exchange.

Microsoft now gives alerts if an email hasn't been answered for a few days. While this seems helpful, we know this information could be aggregated and used against us without any notion of the reasons why.

Rebekah: How do questions of privacy emerge within your discipline?

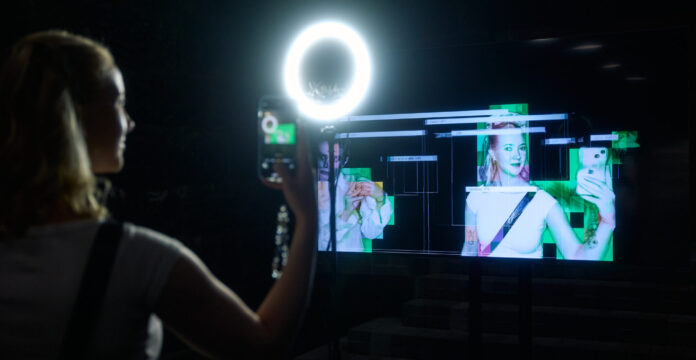

Anne: Particularly in photography. 'Street photography', once a common example, is now a contested area. Photographs can no longer be taken without consent (at the same time, Google Maps provides images of people who have not consented, and online databases are full of non-consensual imagery). These images are also simply taken for machine training. So while, on the one hand, privacy is protected through photography and its ethical standards, online imagery is "access all areas".

Personally, in my profession as an artist, Instagram, LinkedIn and other social media are the face I expose to the world, rather than the more curated form of the past. While some could perceive this as opening up opportunities, I feel it's extra pressure, and success is biased towards how things look on the screen and towards abridged, quick tags that identify aspects of practice. Privacy is thus an issue because it has to do with identity, and by extension identity is moderated and formed through social media.

Rebekah: What do you feel we’re being protected from in relation to data privacy?

Anne: I feel corporations, large institutions and government bodies strategically 'protect' your information. However, each body has a strategy that may or may not align with that of another large body, e.g., government and corporations. I feel identity theft is the main thing we are being protected from, but at the same time this is no comfort, as information doesn't seem to be aligned; it can be harvested, is harvested, and is reduced into decisions made at high levels of government. ChatGPT and its visual counterparts are invading artists' privacy by utilizing imagery already online. This could be an image of an artwork that has taken years to develop; while none of that history is relevant to the harvester, it is important to one's sense of self as an artist. Thus, the veil between public and private is porous and, in a way, continuously being eroded. What cannot be harvested are aspects of the physical, though you could argue that organs are harvested. These aspects, which could be called 'spiritual', are the last bastion of privacy and a threatened species.

Rebekah: What types of bodily sensations do you feel when you think about yourself within the web of data surveillance?

Anne: I feel fear in my chest and anxiety in the brain – also a sense of foreboding in my whole body.

Rebekah: Where do you feel the border lies between basic curiosity and stalking?

Anne: In my opinion, basic curiosity is no longer a thing, because through ChatGPT and other search functions the words and images that train AI are lumped together. So, for example, looking up the work of an artist will also expose you to whatever has been captured about their private life, and to whatever anyone may have commented on or written about their work. It all comes together at once.

Rebekah: Is there any point to advocating data privacy in the era of surveillance economy and machine learning?

Anne: This is an excellent question, and the answer is probably not. NFTs (non-fungible tokens), although hackable, seem to be more reliable in keeping information discreet, i.e., blockchains as I understand them. Each country will need to structure and re-structure the information from the past and try to protect it from being used in different ways. It seems impossible.

These were Anne's thoughts on data privacy and the new protective regulations and innovations emerging at the dawn of AI. If you're interested in learning more about Anne's practice, please click here. VME also has the pleasure of hosting Anne as artist-in-residence in October 2023. If you're curious about her work and want to experience the exciting developments she is creating on campus at the University of Vaasa, please contact me at Rebekah.rousi@uwasa.fi for a more detailed programme.